Using Priority Sliders to help create a team vision of Quality

We software people are pretty passionate about what we do, and some of us are quite opinionated (guilty as charged). When it comes to building software, each of us brings our own unique perspective, and when you put all those perspectives, opinions, egos and biases into the mix, you can end up with something pretty special and of high quality.

Quality is often defined as “value to somebody” or “value to somebody who matters” and, as per the Modern Testing Principles, the only true judge of quality is the customer. But if we can’t speak directly to that “somebody who matters” or to a customer, and we are trying to encourage a whole-team approach to quality, how do we agree on what quality looks like?

One approach that we use quite often to form a common view of the world is called “Priority Sliders”, a practice from the Open Practice Library (https://openpracticelibrary.com) that facilitates conversations about relative priorities and activities.

We work with the team, including the Product Owner, to set the scene, and start off with an example of a quality attribute. We then ask the team to provide other quality attributes that they believe are important given their particular context.

We might end up with something like this:

Now, while these are really great attributes, they are still pretty much open to interpretation; for example does Security mean we need to perform threat analysis and pen test or does it mean something else?

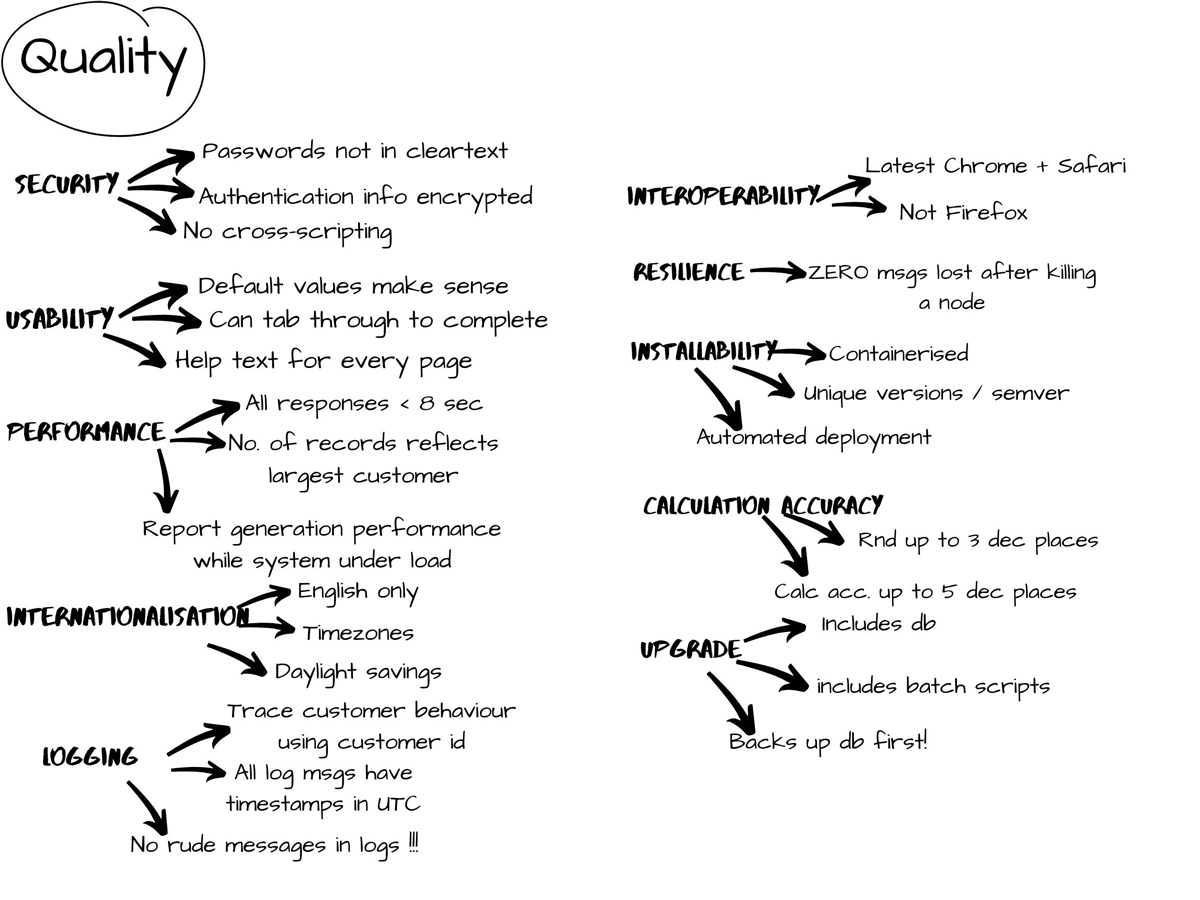

It’s worth spending more time clarifying what we mean, to avoid unnecessary arguments later, and we can do that by using examples that are meaningful in our context.

The examples are a really useful way to set expectations as well as to start conversations about the overall approach. This is by no means a definitive list, and the list will evolve, but we have to start somewhere…

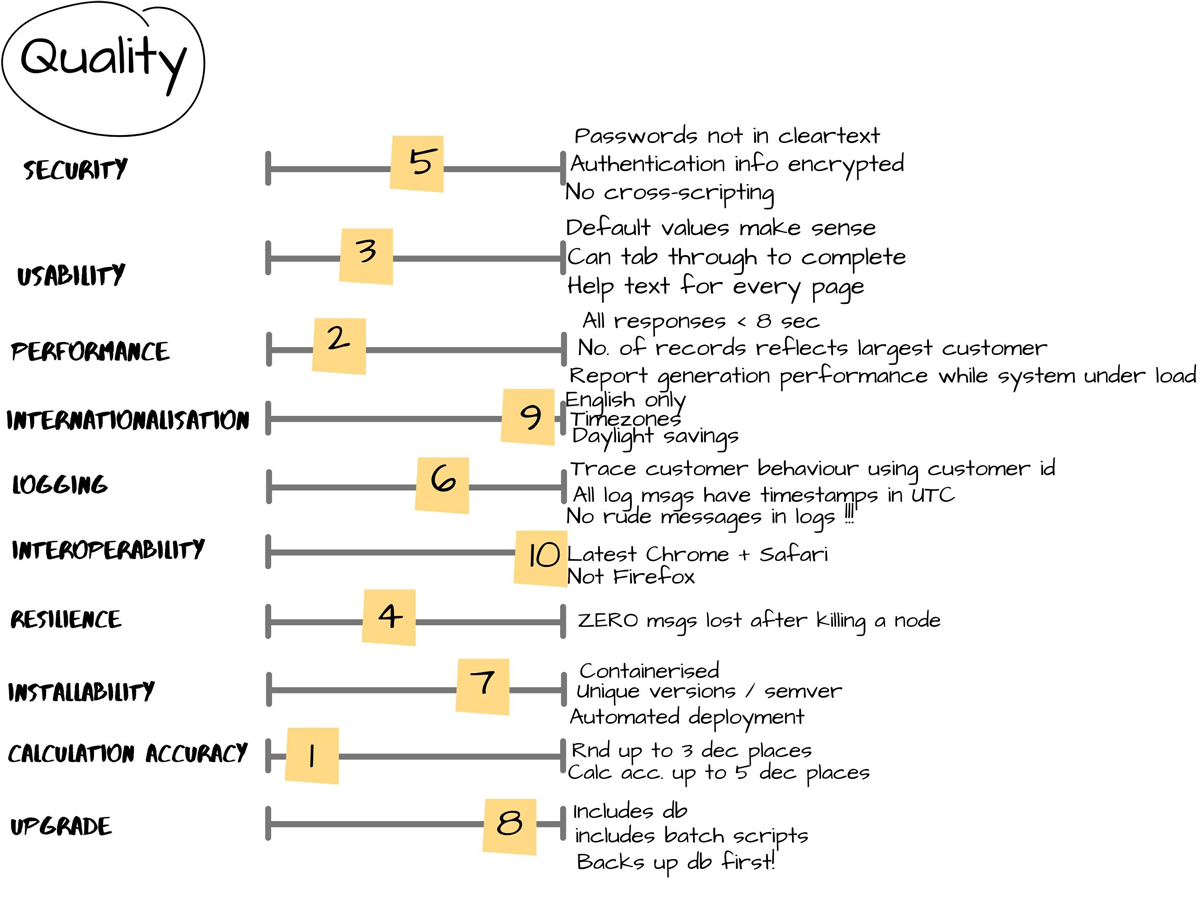

The next step is to prioritise the attributes.

We do this by asking which attribute the team considers to be the most important. For example: if you had only one hour to test something, what would you focus on?

This may start a debate, and that’s perfectly fine, but the Product Owner has the final say.

We go through each attribute in the same way, assigning a unique number to each (1 = most important attribute, 10 = least important attribute). As we work through them, we may add items to our list of examples or add new attributes to our list.

If there are some attributes that we feel are not worth spending time on (especially ones that have caused arguments in the past), we still note them down but give them a really high number, which marks them as low priority.

We end up with what the team agrees are the top 10 priorities in terms of the quality attributes for a particular product and we can present them in terms of a sliding scale (hence the name).
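If the team wants to keep the result somewhere other than a whiteboard, the outcome can be captured in a few lines of code. What follows is only a minimal sketch in Python, not part of the practice itself. The function names `assign_priorities` and `render_sliders` are our own invention, and apart from Security the attribute names are hypothetical placeholders for whatever your team comes up with.

```python
def assign_priorities(ordered_attributes, scale=10):
    """Give each attribute a unique rank; 1 = most important."""
    if len(set(ordered_attributes)) != len(ordered_attributes):
        raise ValueError("each attribute may appear only once")
    if len(ordered_attributes) > scale:
        raise ValueError(f"at most {scale} attributes fit on the scale")
    return {name: rank for rank, name in enumerate(ordered_attributes, start=1)}


def render_sliders(priorities, scale=10):
    """Draw one text slider per attribute, most important first."""
    width = max(len(name) for name in priorities)
    lines = []
    for name, rank in sorted(priorities.items(), key=lambda item: item[1]):
        # The "O" marks where this attribute sits between 1 and `scale`.
        track = "-" * (rank - 1) + "O" + "-" * (scale - rank)
        lines.append(f"{name.ljust(width)}  1 {track} {scale}  (rank {rank})")
    return "\n".join(lines)


# Hypothetical attributes, listed in the order the team agreed on.
agreed_order = ["Security", "Usability", "Performance", "Accessibility"]
priorities = assign_priorities(agreed_order)
print(render_sliders(priorities))
```

Because the ranks are derived from the position in the list, no two attributes can share a number, which mirrors the rule that each attribute gets a unique value.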

We’ve found this to be useful not just for getting a consensus on what quality means for the team; these attributes also help to drive out architectural decisions for the solution itself.

Given that they’re driven by the Product Owner, they also provide a way for us to formulate the strategic themes that make our product offering distinct from those of our competitors. And while the list of attributes isn’t 100% complete, it still helps to have a discussion up-front, while we still have time to think, before we become immersed in the build and test process (and the accompanying flurry of activity).